Making a Prototype Game

The process started with a brief: use emerging real-time technology to create an interactive experience. Over the course of 11 weeks, my team and I conceived, prototyped and pitched our idea for a game.

Game Design

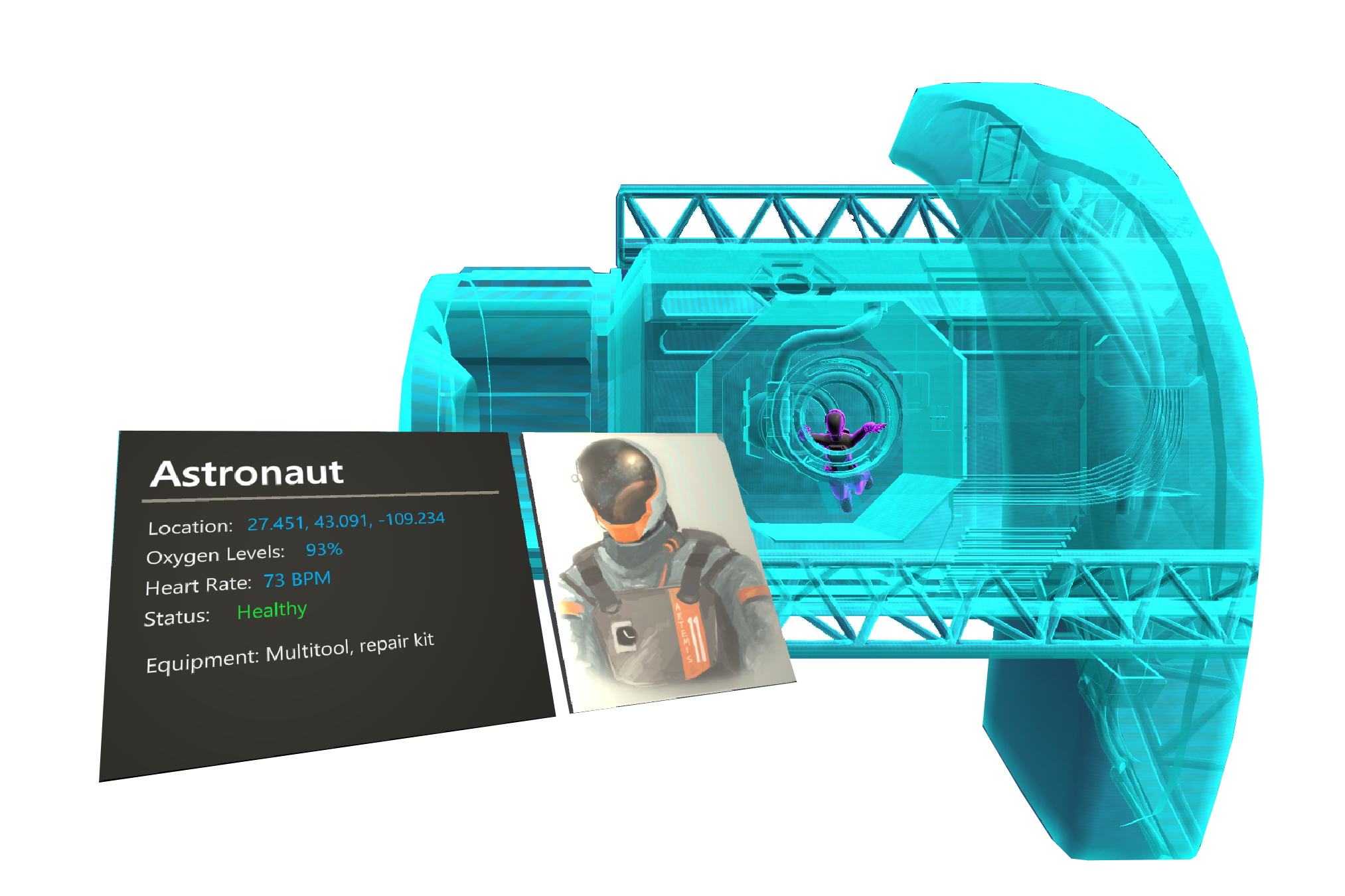

Artemis 11 is a cooperative mixed reality experience, where two players strategise to overcome the perils of space exploration.

One player wears a mixed reality headset (Microsoft HoloLens) and sees a digitally augmented, physical 3D model in front of them. Their role is that of Mission Control: to manage and maintain the spacecraft, along with the lives of those under their command. The second player is in virtual reality (Oculus Rift) and takes on the role of an astronaut aboard the spacecraft. They are responsible for keeping the spacecraft functional and must ensure the ship makes the journey safely to Mars.

Mission Control has access to a number of abilities, such as a shield generator to deflect incoming meteors and sensor scans to detect various other threats. However, these components can become damaged, requiring the two players to work together to repair them. Mission Control instructs the astronaut on how to perform each repair, but it must be done quickly, as threats are frequent.

Our primary inspiration was the game Keep Talking and Nobody Explodes and its emphasis on communication. We wanted to explore the communication challenges that arise when each of the two players has access to information and abilities that the other does not. However, we also wanted to give each player their own agency, as various events could cut the players’ vocal communication. We drew further inspiration from the game Faster Than Light and its control of systems aboard a spacecraft. Giving the players other strategic choices added to the intensity we wanted them to feel.

Art Design

There were two distinct states that influenced the design. The primary design was grounded in a realistic take on a future NASA mission. This choice coincided with the 50th anniversary of Apollo 11 at the time of development, so it seemed fitting. Our ship design was inspired by the Lunar Orbital Platform-Gateway, a conceptual space station serving as a refuelling stopover for future space exploration. The astronaut player was represented by a futuristic take on a lightweight, mobile space suit, as the character would need to quickly perform various zero-G maneuvers whilst on spacewalks. We wanted both players to be present in 3D space in order to feel more connected, so the AR player’s avatar was designed as an autonomous drone.

As a creative content studio, we also wanted to introduce elements of the fantastical into real-life phenomena. Whenever the astronaut’s oxygen level dropped too low, they would enter a state of hypoxia. This would introduce hallucinations into the experience, which grew more intense until the player either reached a fail state or returned to the ship to recover safely.
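The hypoxia loop can be sketched as a small state update. This is only an illustration of the mechanic described above, written in Python rather than as the project's Unity code. The threshold, the rates, and the names `Astronaut` and `update` are all hypothetical, and a refilled oxygen supply stands in for returning to the ship.

```python
from dataclasses import dataclass

# All thresholds and rates are hypothetical values chosen for illustration.
HYPOXIA_THRESHOLD = 0.25  # oxygen fraction below which hallucinations begin
INTENSITY_RATE = 0.1      # hallucination intensity gained per second while hypoxic
RECOVERY_RATE = 0.2       # intensity lost per second once oxygen is restored


@dataclass
class Astronaut:
    oxygen: float = 1.0     # 0.0 (empty) to 1.0 (full)
    intensity: float = 0.0  # hallucination intensity, 0.0 to 1.0
    failed: bool = False

    def update(self, dt: float, oxygen_delta: float) -> str:
        """Advance by dt seconds and return the current state name."""
        if self.failed:
            return "failed"
        # oxygen_delta is the change per second (negative on a spacewalk,
        # positive while recovering aboard the ship).
        self.oxygen = min(1.0, max(0.0, self.oxygen + oxygen_delta * dt))
        if self.oxygen < HYPOXIA_THRESHOLD:
            self.intensity = min(1.0, self.intensity + INTENSITY_RATE * dt)
        else:
            self.intensity = max(0.0, self.intensity - RECOVERY_RATE * dt)
        if self.intensity >= 1.0:
            self.failed = True
            return "failed"
        return "hypoxic" if self.intensity > 0.0 else "normal"
```

Keeping the hallucination intensity separate from the oxygen level means the effects can fade gradually after the player recovers, instead of switching off the moment oxygen is restored.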

Tech

The project was developed in Unity 2019.1.2f1. It was the engine we were most familiar with, and we felt it would let us achieve the most in the time we had.

To handle the multiplayer functionality there were a few different options. The simplest would have been Unity’s default multiplayer system, UNet; however, at the time of development it was in the process of being deprecated. The next best solution seemed to be Photon Engine. It is a cloud-based solution, and so was scalable, portable, and easily configurable. We were able to quickly set up positional tracking, voice communication and other shared data such as oxygen levels.
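To give a rough idea of what "shared data" means here, the sketch below packs a position and an oxygen level into a message that one client could send to the other. It is engine-agnostic Python and does not use or represent the Photon API; the `AstronautSnapshot` type, its fields, and the JSON encoding are assumptions made for illustration.

```python
import json
from dataclasses import dataclass, asdict
from typing import Tuple


@dataclass
class AstronautSnapshot:
    """Hypothetical state that one client shares with the other."""
    position: Tuple[float, float, float]
    oxygen: float


def encode(snapshot: AstronautSnapshot) -> bytes:
    # json.dumps writes the position tuple as a JSON array.
    return json.dumps(asdict(snapshot)).encode("utf-8")


def decode(payload: bytes) -> AstronautSnapshot:
    data = json.loads(payload.decode("utf-8"))
    # JSON arrays come back as lists, so convert the position to a tuple again.
    return AstronautSnapshot(position=tuple(data["position"]), oxygen=data["oxygen"])
```

A networking layer would normally handle serialisation and delivery itself; the point of the sketch is only that both players read the same small set of values.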

For VR locomotion, we used VRTK V4.0, which was in beta at the time of development. I was familiar with version 3, but the toolkit had changed significantly in this release. During development, documentation was very scarce beyond a few getting-started tutorials and the Slack development channel, so the learning process was difficult and required significant time to experiment. This slowed progress, but we were eventually able to get zero-G locomotion and custom interactables working. In hindsight, it would have been better to switch to SteamVR, especially as we were not able to get VRTK working with Unity’s new Lightweight Render Pipeline (LWRP).

For MR development, we used Microsoft’s own development kit, MRTK V2, as this was the only really viable option. Luckily, it is very well documented and development was relatively easy. We were able to stream a Unity editor session to the headset and test the multiplayer functionality quickly. The difficulty was that hand tracking and gestures only worked when building to the headset, which limited how much testing we were able to do for those interactions.

The Process

It started with three weeks of continual pitching and idea generation. Groups were randomly rotated during this process to work out which teams worked best together. We came out of it with a solid foundation, a baseline of targets to hit, and the beginnings of the game design. The next few weeks were spent researching, developing the gameplay ideas further and storyboarding. Once some concepts had been developed, first-pass models were blocked out and in-engine multiplayer functionality was developed.

The repair interactions were first designed as a paper prototype, with one player communicating with the other over a mobile phone to explain how to solve the puzzle. We quickly realised that the puzzle needed to be simplified in order to be understood quickly. AR interaction design also started as a paper prototype. This was an effective approach because UI creation is time-consuming, and because the interaction design already made use of the physical world through the 3D model ship.

This iterative process continued up until two weeks before the pitch date, when we locked down all assets and functionality so that we could prepare the pitch trailer and presentation. We had aimed to get to this point a week earlier, but it was difficult to judge when the work had reached a high enough standard. We had decided to go for a cinematic gameplay trailer, so the extra week was invaluable for improving the visual fidelity. This approach did have its risks, as it took away an opportunity to explain the game more clearly.

The Result

My primary roles in this project were programming and game design. I feel like we accomplished a lot in this time, and I was pleased with what we managed to achieve for our first group game project.

Overall, I found this a hugely enjoyable process. Developing a prototype game with so many elements forced us to make quick decisions, move rapidly and really push ourselves. Whilst it is unfortunate that our project was not selected, I learnt a lot from the process and had a great time.

Huge thanks to my fellow teammates. I had a blast working on this project with you!

Concept art, look dev, game design: Jessica Lyon

3D modelling, surfacing, environment artist: Nick Refalo

3D modelling, surfacing, level design: Tom Scior

3D printing, generalist: Javier Amador

Animation, interaction design: Nakul Umashankar

Producer: Adelaine Baltazar